Compute Express Link

Important

This feature might not be applicable to all platforms. Check the Supported Features section of the individual platform pages to confirm that this feature is listed as supported.

Compute Express Link (CXL) is an open standard interconnect for high-speed central processing unit (CPU)-to-device and CPU-to-memory connections, designed to accelerate next-generation data center performance. CXL is built on the PCI Express (PCIe) physical and electrical interface, with protocols in three key areas: input/output (I/O), memory, and cache coherence.

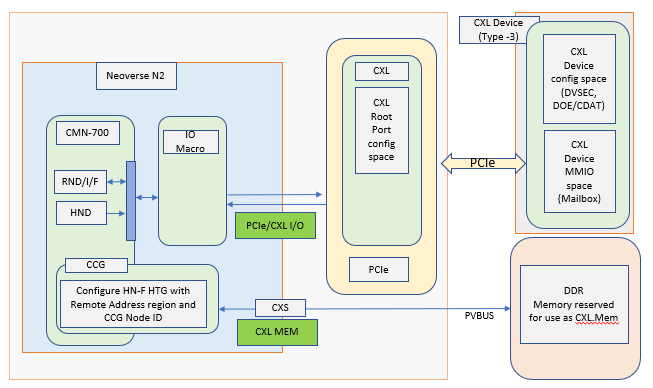

Fig. 3 CXL Type-3 device modeled on Neoverse N2 reference design platform.

This document explains how a CXL 2.0 Type-3 device (memory expander) is handled on the Neoverse N2 reference design platform. At present, CXL support has been verified on the ‘rdn2cfg1’ platform. A CXL Type-3 device supports the CXL.io and CXL.mem protocols and acts as a memory expander for the host SoC.

CXL Software Overview

System Control Processor (SCP) firmware

In the host address space, an 8GB region starting at 3FE_0000_0000h is reserved for CXL memory. This region is part of the SCG and is configured as a Normal cacheable memory region.
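As a quick sanity check of the reserved window, the arithmetic can be sketched as below. The base address and the 8GB size come from the text above; the constant and function names are illustrative only and do not correspond to SCP firmware symbols.

```python
# Values taken from the paragraph above; names are hypothetical.
CXL_HOST_BASE = 0x3FE_0000_0000   # start of the reserved host window
CXL_HOST_SIZE = 8 * 1024 ** 3     # 8GB


def window_end(base: int, size: int) -> int:
    """Return the last byte address covered by [base, base + size)."""
    return base + size - 1


def in_window(addr: int, base: int = CXL_HOST_BASE,
              size: int = CXL_HOST_SIZE) -> bool:
    """True when a host physical address falls inside the CXL window."""
    return base <= addr < base + size


if __name__ == "__main__":
    print(hex(CXL_HOST_SIZE))
    print(hex(window_end(CXL_HOST_BASE, CXL_HOST_SIZE)))
```

The window size, 0x200000000, is the same value that the kernel later reports for the root decoder size in sysfs.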

CMN-700 is the main interconnect, which will be configured for PCIe enumeration and topology discovery.

The pcie_enumeration module performs PCIe enumeration. As part of the enumeration process, it also checks whether each detected PCIe device supports the CXL Extended Capability by invoking a CXL module API.
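The capability check described above can be illustrated with a minimal sketch that walks the PCIe extended capability list in a copy of a function's configuration space. It assumes the standard PCIe layout (extended capabilities start at offset 0x100, DVSEC has capability ID 0x0023, DOE has capability ID 0x002E) and the CXL DVSEC vendor ID 0x1E98 that also appears in the `lspci` output later in this document. The function names and the synthetic configuration space are hypothetical; this is not the SCP firmware implementation.

```python
import struct

PCI_EXT_CAP_START = 0x100   # first extended capability header
EXT_CAP_ID_DVSEC = 0x0023   # Designated Vendor-Specific Extended Capability
EXT_CAP_ID_DOE = 0x002E     # Data Object Exchange
CXL_VENDOR_ID = 0x1E98      # vendor ID carried in CXL DVSEC headers


def read32(cfg, off):
    """Read a little-endian 32-bit value from the config space copy."""
    return struct.unpack_from("<I", cfg, off)[0]


def walk_ext_caps(cfg):
    """Yield (offset, cap_id, version) for each extended capability."""
    off = PCI_EXT_CAP_START
    seen = set()
    while off and off not in seen and off + 4 <= len(cfg):
        seen.add(off)                      # guard against looping lists
        hdr = read32(cfg, off)
        if hdr in (0, 0xFFFFFFFF):
            break
        yield off, hdr & 0xFFFF, (hdr >> 16) & 0xF
        off = (hdr >> 20) & 0xFFC          # next pointer, DWORD aligned


def find_cxl_and_doe(cfg):
    """Return (cxl_dvsec_offsets, doe_offsets) found in cfg."""
    cxl, doe = [], []
    for off, cap_id, _version in walk_ext_caps(cfg):
        if cap_id == EXT_CAP_ID_DOE:
            doe.append(off)
        elif cap_id == EXT_CAP_ID_DVSEC:
            # DVSEC header 1 follows the capability header; bits 15:0
            # hold the DVSEC vendor ID.
            if read32(cfg, off + 4) & 0xFFFF == CXL_VENDOR_ID:
                cxl.append(off)
    return cxl, doe


def synthetic_config_space():
    """Build a made-up 4KB config space: DOE at 0x100, CXL DVSEC at 0x118."""
    cfg = bytearray(4096)
    struct.pack_into("<I", cfg, 0x100, (0x118 << 20) | (1 << 16) | EXT_CAP_ID_DOE)
    struct.pack_into("<I", cfg, 0x118, (0 << 20) | (1 << 16) | EXT_CAP_ID_DVSEC)
    struct.pack_into("<I", cfg, 0x11C, (40 << 20) | (1 << 16) | CXL_VENDOR_ID)
    return bytes(cfg)
```

Calling `find_cxl_and_doe(synthetic_config_space())` reports one CXL DVSEC and one DOE capability. The offsets in the synthetic buffer are arbitrary and are not the layout of the FVP device.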

The CXL module also determines whether the CXL device has the Data Object Exchange (DOE) capability. If it does, the module executes DOE operations to fetch the Coherent Device Attribute Table (CDAT) structures and learn the memory range that the CXL device supports. The DOE operation sequence is implemented following DOE-ECN 12Mar-2020.

The module checks the DOE busy bit of the CXL object and then initiates the DOE operation to fetch the CXL CDAT structures (the latest FVP model supports DSMAS). It reads the CXL device DPA base and DPA length from the DSMAS structures and saves them in an internal remote memory software data structure.
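To make the DSMAS step concrete, the following sketch extracts the DPA base and DPA length from a CDAT buffer. It assumes the DOE response framing has already been stripped, and it assumes the CDAT layout as I understand it from the CDAT specification: a 16-byte table header followed by entries that each begin with a type byte, a reserved byte, and a 16-bit length, with DSMAS being type 0 and 24 bytes long. Checksum verification is omitted. `RemoteMemoryRegion` and the helper names are hypothetical and only stand in for the internal data structure mentioned above.

```python
import struct
from dataclasses import dataclass

CDAT_HEADER = struct.Struct("<IBB6xI")     # length, revision, checksum, sequence
CDAT_ENTRY_HEADER = struct.Struct("<BxH")  # type, (reserved), length
DSMAS_BODY = struct.Struct("<BB2xQQ")      # handle, flags, DPA base, DPA length
CDAT_TYPE_DSMAS = 0


@dataclass
class RemoteMemoryRegion:
    """Hypothetical stand-in for the remote memory data structure."""
    dpa_base: int
    dpa_length: int
    flags: int


def parse_dsmas(cdat):
    """Return one RemoteMemoryRegion per DSMAS entry found in cdat."""
    total_len, _rev, _checksum, _seq = CDAT_HEADER.unpack_from(cdat, 0)
    total_len = min(total_len, len(cdat))
    regions = []
    off = CDAT_HEADER.size
    while off + CDAT_ENTRY_HEADER.size <= total_len:
        entry_type, entry_len = CDAT_ENTRY_HEADER.unpack_from(cdat, off)
        if entry_len < CDAT_ENTRY_HEADER.size or off + entry_len > total_len:
            break  # malformed entry: stop instead of looping forever
        body_off = off + CDAT_ENTRY_HEADER.size
        if (entry_type == CDAT_TYPE_DSMAS
                and entry_len >= CDAT_ENTRY_HEADER.size + DSMAS_BODY.size):
            _handle, flags, base, length = DSMAS_BODY.unpack_from(cdat, body_off)
            regions.append(RemoteMemoryRegion(base, length, flags))
        off += entry_len
    return regions


def build_example_cdat(dpa_base, dpa_length):
    """Build a synthetic CDAT with a single DSMAS entry (checksum left at 0)."""
    entry = (CDAT_ENTRY_HEADER.pack(CDAT_TYPE_DSMAS, 24)
             + DSMAS_BODY.pack(0, 0, dpa_base, dpa_length))
    header = CDAT_HEADER.pack(CDAT_HEADER.size + len(entry), 1, 0, 0)
    return header + entry
```

For example, `parse_dsmas(build_example_cdat(0, 0x200000000))` yields a single region describing an 8GB device memory range starting at DPA 0.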

After completing the enumeration process, the pcie_enumeration module invokes a CXL module API to map the remote CXL memory region into the host address space and perform the necessary CMN configuration.

The software data structure for remote memory holds the CXL Type-3 device physical memory address, size, and memory attributes. The CXL module calls a CMN module API to perform the necessary interconnect configuration.

The CMN module configures the HN-F Hashed Target Region (HTG) with the address region reserved for remote CXL memory, based on the discovered remote device memory size. It configures the HN-F CCG SA node IDs and the CXL.mem region in the HN-F SAM HTG in the following order:

1. HNF_SAM_CCG_SA_NODEID_REG
2. HNF_SAM_HTG_CFG3_MEMREGION
3. HNF_SAM_HTG_CFG2_MEMREGION
4. HNF_SAM_HTG_CFG1_MEMREGION

It then programs por_ccg_ra_sam_addr_region_reg with the target HAID, host memory base address, and size for accessing remote CXL memory.

EDK2 Platform

A new CXL.Dxe driver is introduced that looks for PCIe devices with CXL and DOE capability. The discovery process begins when a notification is received on installation of gEfiPciEnumerationCompleteProtocolGuid.

It first looks for PCIe devices with the CXL extended capability and then checks whether the device supports DOE. If DOE is supported, it sends a DOE command and gets the remote memory details in the form of CDAT tables (DSMAS). The operation is similar to the one performed in the SCP firmware, explained above.

After the complete PCIe topology is enumerated, the details of all remote memory nodes are stored in a local data structure and the CXLPlatformProtocol interface is installed.

The ACPITableGenerator module prepares ACPI tables dynamically. It uses the CXLPlatformProtocol interfaces to get the previously discovered remote CXL memory details, and prepares the SRAT table with both local memory and remote CXL memory nodes, along with other necessary details.

It also prepares the HMAT table with the required proximity and latency information.

The remote CXL memory is presented to the kernel as a memory-only NUMA node.

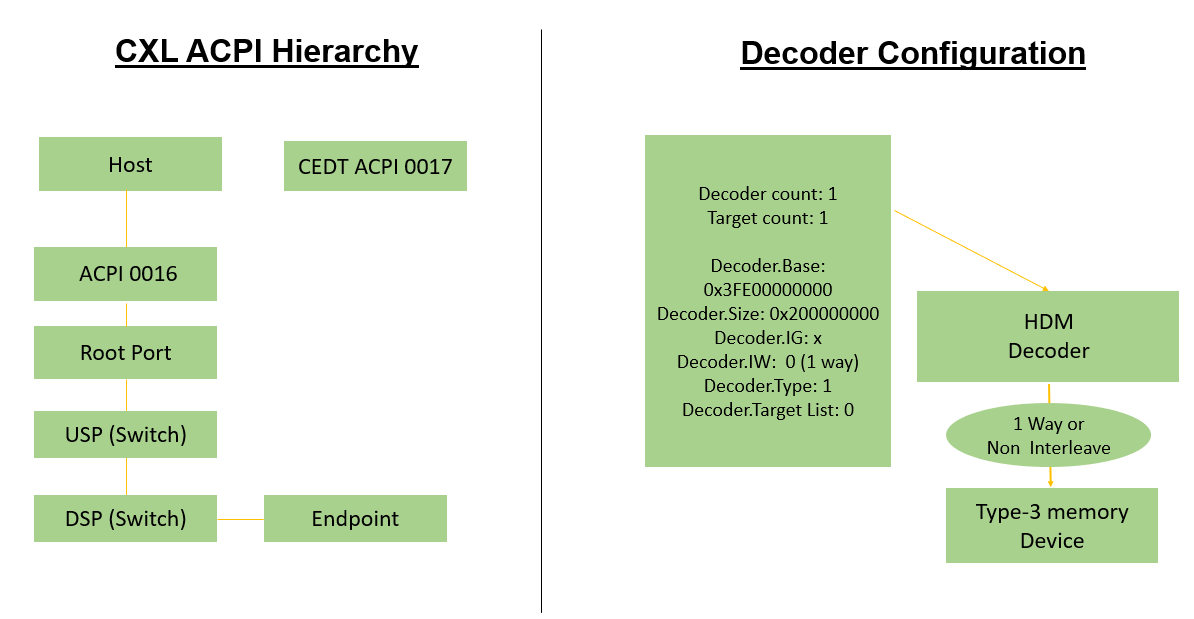

In addition, the CEDT structures CHBS and CFMWS are created and passed to the kernel. In the CFMWS structure, the number of interleave targets is set to 1 to demonstrate a reference solution with CEDT structures, because the current FVP model has no interleaving capability. With this configuration there are no real interleaved address windows across multiple ports; it is equivalent to a single-port CXL host bridge.
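The single-target CFMWS configuration can be illustrated with a small consistency check. This sketch assumes the CXL 2.0 encoding of the CFMWS "Encoded Number of Interleave Ways" field (values 0 to 4 mean 1, 2, 4, 8, and 16 ways) and my reading of the CEDT rules that the window base is 256MB aligned and the window size is a multiple of the number of ways times 256MB. The function names are hypothetical and are not EDK2 APIs.

```python
ALIGN_256MB = 256 * 1024 * 1024


def eniw_to_ways(eniw):
    """Decode the CFMWS interleave-ways field (CXL 2.0 encodings only)."""
    if 0 <= eniw <= 4:
        return 1 << eniw
    raise ValueError(f"unsupported ENIW encoding: {eniw}")


def check_window(base_hpa, window_size, eniw):
    """Return a list of problems found in a CFMWS window (empty if none)."""
    ways = eniw_to_ways(eniw)
    problems = []
    if base_hpa % ALIGN_256MB:
        problems.append("base HPA is not 256MB aligned")
    if window_size == 0 or window_size % (ways * ALIGN_256MB):
        problems.append("window size is not a multiple of ways * 256MB")
    return problems


if __name__ == "__main__":
    # Window used in this document: 8GB at 0x3FE_0000_0000, one target.
    print(eniw_to_ways(0), check_window(0x3FE_0000_0000, 0x2_0000_0000, 0))
```

With one interleave target the encoded field is 0, which is why the kernel later reports `interleave_ways` as 1 for the root decoder.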

ACPI0016 and ACPI0017 objects are created by PcieAcpiTableGenerator.Dxe at runtime and passed to the kernel. ACPI0016 indicates the presence of a CXL host bridge, and ACPI0017 corresponds to the CFMWS and CHBS structures.

Kernel

All firmware work is validated using the CXL framework present in the kernel.

CXL with CEDT and Decoder configuration

Download and build the required platform software

To download and build the platform firmware, refer to Buildroot boot or Busybox Boot. Any other boot mechanism, such as Distro boot, should also work for testing CXL capability.

Ensure that the model parameter “-C pcie_group_0.pciex16.pcie_rc.add_cxl_type3_device_to_default_hierarchy=true” is present in “rdinfra/platforms/<rd platform>/run_model.sh”

Validating CXL capabilities in Kernel

The following explanation uses ‘buildroot’ boot as an example, because buildroot provides more utility options.

Boot the platform to the buildroot command line prompt.

Run the command ‘lspci -k’, which lists all the PCIe devices and their associated kernel drivers. The output for the CXL device is shown below. Note that the BDF of the CXL device may vary based on the PCIe topology of the model.

    00:18.0 Memory controller [0502]: ARM Device ff82 (rev 0f)
            Subsystem: ARM Device 000f
            Kernel driver in use: cxl_pci

Ensure that CXL is enabled in the kernel ‘defconfig’.

    CONFIG_CXL_BUS=y
    CONFIG_CXL_MEM_RAW_COMMANDS=y

Next, to check the capabilities of the CXL device, execute ‘lspci -vv -s 00:18.0’, which displays the following output.

    00:18.0 Memory controller [0502]: ARM Device ff82 (rev 0f) (prog-if 10)
            Subsystem: ARM Device 000f
            Control: I/O- Mem+ BusMaster- SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-
            Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-
            IOMMU group: 10
            Region 0: Memory at 60800000 (32-bit, non-prefetchable) [size=64K]
            Capabilities: [40] Power Management version 1
                    Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot+,D3cold-)
                    Status: D0 NoSoftRst- PME-Enable- DSel=0 DScale=0 PME-
            ....
            Capabilities: [118 v1] Extended Capability ID 0x2e
            Capabilities: [130 v1] Designated Vendor-Specific: Vendor=1e98 ID=0000 Rev=1 Len=40: CXL
                    CXLCap: Cache- IO+ Mem+ Mem HW Init- HDMCount 1 Viral-
                    CXLCtl: Cache- IO+ Mem- Cache SF Cov 0 Cache SF Gran 0 Cache Clean- Viral-
                    CXLSta: Viral-
            Capabilities: [158 v1] Designated Vendor-Specific: Vendor=1e98 ID=0008 Rev=0 Len=20 <?>
            Kernel driver in use: cxl_pci

For checking the CXL device memory capabilities, NUMA utilities can be used. Enable NUMACTL package in buildroot ‘defconfig’.

For example, in 'configs/rdn2cfg1/buildroot/aarch64_rdinfra_defconfig' enable 'BR2_PACKAGE_NUMACTL=y'

With the NUMA utilities available in buildroot, execute the command ‘numactl -H’, which shows all the available NUMA nodes and their capacities.

    numactl -H
    available: 2 nodes (0-1)
    node 0 cpus: 0 1 2 3 4 5 6 7
    node 0 size: 7930 MB
    node 0 free: 7824 MB
    node 1 cpus:
    node 1 size: 8031 MB
    node 1 free: 8010 MB
    node distances:
    node   0   1
      0:  10  20
      1:  20  10

Here it shows that Node-1 (the CXL device) has a memory capacity of 8031MB, which adds to the total memory available to the system. This extended memory region is available for kernel usage, which can be verified using the NUMA utilities ‘numademo’ and ‘numastat’.

    # numastat -n
    Per-node numastat info (in MBs):
                              Node 0          Node 1           Total
                     --------------- --------------- ---------------
    Numa_Hit                  215.21           84.72          299.93
    Numa_Miss                   0.00            0.00            0.00
    Numa_Foreign                0.00            0.00            0.00
    Interleave_Hit             25.98           26.68           52.66
    Local_Node                215.21            0.00          215.21
    Other_Node                  0.00           84.72           84.72
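When this check needs to be scripted, the memory-only node can be picked out of the `numactl -H` output programmatically. The following is a minimal sketch that parses the text format shown earlier; the sample text is copied from this document, and the function names are hypothetical.

```python
import re

SAMPLE_NUMACTL_OUTPUT = """\
available: 2 nodes (0-1)
node 0 cpus: 0 1 2 3 4 5 6 7
node 0 size: 7930 MB
node 0 free: 7824 MB
node 1 cpus:
node 1 size: 8031 MB
node 1 free: 8010 MB
node distances:
node   0   1
  0:  10  20
  1:  20  10
"""

NODE_LINE = re.compile(r"node (\d+) (cpus|size|free):\s*(.*)$")


def parse_numactl_hardware(text):
    """Return {node_id: {"cpus": [...], "size_mb": int, "free_mb": int}}."""
    nodes = {}
    for line in text.splitlines():
        match = NODE_LINE.match(line.strip())
        if not match:
            continue  # skips the summary line and the distance table
        node = nodes.setdefault(
            int(match.group(1)), {"cpus": [], "size_mb": 0, "free_mb": 0})
        key, value = match.group(2), match.group(3)
        if key == "cpus":
            node["cpus"] = [int(cpu) for cpu in value.split()]
        else:
            node[key + "_mb"] = int(value.split()[0])
    return nodes


def memory_only_nodes(nodes):
    """Nodes that have memory but no CPUs, such as a CXL memory expander."""
    return [node_id for node_id, info in sorted(nodes.items())
            if not info["cpus"] and info["size_mb"] > 0]
```

On a target, the input text could be captured with `subprocess.run(["numactl", "-H"], capture_output=True, text=True).stdout`, assuming the numactl package is installed.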

If the NUMA utilities are not present, the CXL device memory information can be verified using the NUMA node1 sysfs entries.

    [ceoss@localhost ~]$ cat /sys/devices/system/node/node1/meminfo
    Node 1 MemTotal:       8224032 kB
    Node 1 MemFree:        8203836 kB
    Node 1 MemUsed:          20196 kB
    Node 1 Active:               0 kB
    Node 1 Inactive:             0 kB
    ...
    Node 1 KReclaimable:      2180 kB
    Node 1 Slab:              6060 kB
    Node 1 SReclaimable:      2180 kB
    Node 1 SUnreclaim:        3880 kB
    Node 1 HugePages_Total:     0
    Node 1 HugePages_Free:      0
    Node 1 HugePages_Surp:      0

The above examples demonstrate how a CXL Type-3 device is used as a memory expander and how the device memory region can be utilized by the kernel.
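The node1 `meminfo` values can be cross-checked against the `numactl -H` figures with a few lines of script. This is a minimal sketch that parses the per-node meminfo format shown above; the sample values are copied from this document, and the helper names are hypothetical.

```python
SAMPLE_NODE1_MEMINFO = """\
Node 1 MemTotal:       8224032 kB
Node 1 MemFree:        8203836 kB
Node 1 MemUsed:          20196 kB
Node 1 HugePages_Total:     0
"""


def parse_node_meminfo(text):
    """Map field name to its integer value (kB fields stay in kB)."""
    fields = {}
    for line in text.splitlines():
        parts = line.split()
        # Expected shape: "Node <n> <Field>: <value> [kB]"
        if len(parts) >= 4 and parts[0] == "Node" and parts[2].endswith(":"):
            fields[parts[2][:-1]] = int(parts[3])
    return fields


def read_node_meminfo(node, sysfs_root="/sys/devices/system/node"):
    """Read and parse meminfo for one NUMA node from sysfs."""
    with open(f"{sysfs_root}/node{node}/meminfo") as handle:
        return parse_node_meminfo(handle.read())


if __name__ == "__main__":
    info = parse_node_meminfo(SAMPLE_NODE1_MEMINFO)
    print(info["MemTotal"] // 1024, "MB total on node 1")
```

Converting the sample MemTotal from kB to MB gives 8031, which matches the node 1 size reported by `numactl -H`.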

CEDT and CXL ACPI configuration in Kernel sysfs

Check the CXL memory device size through the CXL sysfs interface (the CXL.mem device size is shown as 8GB).

    # cat /sys/bus/cxl/devices/mem0/ram/size
    0x200000000

CXL Mem device at root device downstream port.

    # cat /sys/bus/cxl/devices/root0/dport0/physical_node/0000\:00\:18.0/mem0/ram/size
    0x200000000

Decoder configurations passed through CFMWS and seen in kernel.

    # cat /sys/bus/cxl/devices/root0/decoder0.0/start
    0x3fe00000000
    # cat /sys/bus/cxl/devices/root0/decoder0.0/size
    0x200000000
    # cat /sys/bus/cxl/devices/root0/decoder0.0/target_list
    0
    # cat /sys/bus/cxl/devices/root0/decoder0.0/interleave_ways
    1
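The sysfs values above can be combined into one consistency check: the root decoder window should be large enough to cover the device RAM size, and it should use a single interleave way. The sketch below assumes the sysfs paths shown in this section; the function names are hypothetical.

```python
from pathlib import Path


def summarize_decoder(start, size, interleave_ways, ram_size):
    """Summarize a root decoder from the raw strings read out of sysfs."""
    start_addr = int(start.strip(), 16)
    window_size = int(size.strip(), 16)
    device_ram = int(ram_size.strip(), 16)
    return {
        "start": start_addr,
        "end": start_addr + window_size - 1,
        "ways": int(interleave_ways.strip()),
        "covers_device_ram": window_size >= device_ram,
    }


def read_decoder(root="/sys/bus/cxl/devices",
                 decoder="root0/decoder0.0", memdev="mem0"):
    """Read the attributes shown in this section and summarize them."""
    base = Path(root)
    return summarize_decoder(
        (base / decoder / "start").read_text(),
        (base / decoder / "size").read_text(),
        (base / decoder / "interleave_ways").read_text(),
        (base / memdev / "ram" / "size").read_text(),
    )


if __name__ == "__main__":
    # Values copied from the console output in this section.
    summary = summarize_decoder("0x3fe00000000", "0x200000000", "1",
                                "0x200000000")
    print(hex(summary["end"]), summary["covers_device_ram"])
```

`read_decoder()` only works on a booted target where the CXL bus is populated; `summarize_decoder()` can be run anywhere with values copied from the console.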